When Is Correlation Transitive?

September 16th, 2019

Given two unit vectors $v,w$ in a real inner product space, one can define the correlation between these vectors to be their inner product $\langle v, w \rangle$, or in more geometric terms, the cosine of the angle $\angle(v,w)$ subtended by $v$ and $w$. By the Cauchy-Schwarz inequality, this is a quantity between $-1$ and $+1$, with the extreme positive correlation $+1$ occurring when $v,w$ are identical, the extreme negative correlation $-1$ occurring when $v,w$ are diametrically opposite, and the zero correlation $0$ occurring when $v,w$ are orthogonal. This notion is closely related to the notion of correlation between two non-constant square-integrable real-valued random variables $X,Y$, which is the same as the correlation between two unit vectors $v,w$ lying in the Hilbert space $L^2(\Omega)$ of square-integrable random variables, with $v$ being the normalisation of $X$ defined by subtracting off the mean ${\mathbb E} X$ and then dividing by the standard deviation of $X$, and similarly for $w$ and $Y$.

One can also define correlation for complex (Hermitian) inner product spaces by taking the real part $\hbox{Re} \langle \cdot, \cdot \rangle$ of the complex inner product to recover a real inner product.

While reading the (highly recommended) recent popular maths book "How not to be wrong", by my friend and co-author Jordan Ellenberg, I came across the (important) point that correlation is not necessarily transitive: if  correlates with

correlates with  , and

, and  correlates with

correlates with  , then this does not imply that

, then this does not imply that  correlates with

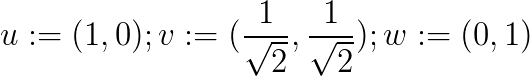

correlates with  . A simple geometric example is provided by the three unit vectors

. A simple geometric example is provided by the three unit vectors

in the Euclidean plane  :

:  and

and  have a positive correlation of

have a positive correlation of  , as does

, as does  and

and  , but

, but  and

and  are not correlated with each other. Or: for a typical undergraduate course, it is generally true that good exam scores are correlated with a deep understanding of the course material, and memorising from flash cards are correlated with good exam scores, but this does not imply that memorising flash cards is correlated with deep understanding of the course material.

are not correlated with each other. Or: for a typical undergraduate course, it is generally true that good exam scores are correlated with a deep understanding of the course material, and memorising from flash cards are correlated with good exam scores, but this does not imply that memorising flash cards is correlated with deep understanding of the course material.
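The planar example can be checked directly. A minimal Python sketch (the helper `dot` is our own naming, not something from the post):

```python
import math


def dot(x, y):
    """Euclidean inner product of two equal-length real vectors."""
    return sum(a * b for a, b in zip(x, y))


u = (1.0, 0.0)
v = (1 / math.sqrt(2), 1 / math.sqrt(2))
w = (0.0, 1.0)

corr_uv = dot(u, v)  # cos(45 degrees) = 1/sqrt(2)
corr_vw = dot(v, w)  # also 1/sqrt(2)
corr_uw = dot(u, w)  # u and w are orthogonal, so this is 0

print(corr_uv, corr_vw, corr_uw)
```

Two strong positive correlations coexist here with zero correlation between the outer pair, which is exactly the failure of transitivity.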

However, there are at least two situations in which some partial version of transitivity of correlation can be recovered. The first is in the "99%" regime in which the correlations are very close to $1$: if $u,v,w$ are unit vectors such that $u$ is very highly correlated with $v$, and $v$ is very highly correlated with $w$, then this does imply that $u$ is very highly correlated with $w$. Indeed, from the identity

$$\| u-v \| = 2^{1/2} (1 - \langle u,v\rangle)^{1/2}$$

(and similarly for $v-w$ and $u-w$) and the triangle inequality

$$\|u-w\| \leq \|u-v\| + \|v-w\|,$$

we see that

$$(1 - \langle u,w \rangle)^{1/2} \leq (1 - \langle u,v\rangle)^{1/2} + (1 - \langle v,w\rangle)^{1/2}. \qquad (1)$$

Thus, for instance, if $\langle u, v \rangle \geq 1-\varepsilon$ and $\langle v,w \rangle \geq 1-\varepsilon$, then $(1 - \langle u,w \rangle)^{1/2} \leq 2\varepsilon^{1/2}$, that is, $\langle u,w \rangle \geq 1-4\varepsilon$. This is of course closely related to (though slightly weaker than) the triangle inequality for angles:

$$\angle(u,w) \leq \angle(u,v) + \angle(v,w).$$
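A quick numerical sanity check of inequality (1), written as a sketch: it samples random triples of unit vectors in ${\bf R}^5$ (dimension, sample size and seed are arbitrary choices of ours) and counts violations. It then builds a planar triple at angles $0, \theta, 2\theta$ with $\cos\theta = 1-\varepsilon$, for which $\langle u,w\rangle = \cos 2\theta = 1 - 4\varepsilon + 2\varepsilon^2$, so the bound $1-4\varepsilon$ is nearly attained.

```python
import math
import random


def dot(x, y):
    """Euclidean inner product of two real vectors."""
    return sum(a * b for a, b in zip(x, y))


def random_unit_vector(dim, rng):
    """Uniformly random point on the unit sphere: a normalised standard Gaussian vector."""
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(dot(x, x))
    return [a / norm for a in x]


def defect(x, y):
    """(1 - <x, y>)^(1/2), clamped at 0 to absorb rounding when <x, y> is extremely close to 1."""
    return math.sqrt(max(0.0, 1.0 - dot(x, y)))


def count_violations_of_1(trials=5_000, dim=5, seed=0):
    """Number of random unit triples (u, v, w) that violate inequality (1)."""
    rng = random.Random(seed)
    violations = 0
    for _ in range(trials):
        u, v, w = (random_unit_vector(dim, rng) for _ in range(3))
        if defect(u, w) > defect(u, v) + defect(v, w) + 1e-9:
            violations += 1
    return violations


# Near-extremal planar example for the "1 - 4 eps" bound.
eps = 0.01
theta = math.acos(1 - eps)
u = (1.0, 0.0)
v = (math.cos(theta), math.sin(theta))
w = (math.cos(2 * theta), math.sin(2 * theta))

print(count_violations_of_1())
print(dot(u, v), dot(v, w), dot(u, w), 1 - 4 * eps)
```

The small tolerance `1e-9` only guards against floating-point rounding; inequality (1) itself is exact.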

Remark 1 (Thanks to Andrew Granville for conversations leading to this observation.) The inequality (1) also holds for sub-unit vectors, i.e. vectors $u,v,w$ with $\|u\|, \|v\|, \|w\| \leq 1$. This comes by extending $u,v,w$ in directions orthogonal to all three original vectors and to each other in order to make them unit vectors, enlarging the ambient Hilbert space $H$ if necessary. More concretely, one can apply (1) to the unit vectors

$$(u, \sqrt{1-\|u\|^2}, 0, 0), \quad (v, 0, \sqrt{1-\|v\|^2}, 0), \quad (w, 0, 0, \sqrt{1-\|w\|^2})$$

in $H \times {\bf R}^3$.
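The extension trick in Remark 1 is easy to test. In this sketch (helper names are hypothetical), each sub-unit vector receives its own extra coordinate $\sqrt{1-\|x\|^2}$, so the extended vectors are unit vectors whose pairwise inner products equal those of the originals.

```python
import math
import random


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def extend_to_unit(x, slot):
    """Append three coordinates to x, with sqrt(1 - |x|^2) in position `slot` (0, 1 or 2).

    Giving each vector its own slot makes the new directions orthogonal to the
    original space and to each other, so inner products are unchanged.
    """
    extra = [0.0, 0.0, 0.0]
    extra[slot] = math.sqrt(max(0.0, 1.0 - dot(x, x)))
    return list(x) + extra


def random_subunit_vector(dim, rng):
    """Random direction scaled to a random length in [0, 1)."""
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    scale = rng.random() / math.sqrt(dot(x, x))
    return [a * scale for a in x]


def defect(x, y):
    """(1 - <x, y>)^(1/2), as in inequality (1)."""
    return math.sqrt(max(0.0, 1.0 - dot(x, y)))


rng = random.Random(1)
u, v, w = (random_subunit_vector(4, rng) for _ in range(3))
U, V, W = extend_to_unit(u, 0), extend_to_unit(v, 1), extend_to_unit(w, 2)

print([dot(X, X) for X in (U, V, W)])  # each is 1 up to rounding
print(dot(U, V) - dot(u, v))           # inner products are preserved
print(defect(u, w) <= defect(u, v) + defect(v, w))  # (1) for the sub-unit vectors
```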

But even in the "1%" regime in which correlations are very weak, there is still a version of transitivity of correlation, known as the van der Corput lemma, which basically asserts that if a unit vector $v$ is correlated with many unit vectors $u_1,\dots,u_n$, then many of the pairs $u_i,u_j$ will then be correlated with each other. Indeed, from the Cauchy-Schwarz inequality

$$\left|\left\langle v, \sum_{i=1}^n u_i \right\rangle\right|^2 \leq \|v\|^2 \left\| \sum_{i=1}^n u_i \right\|^2$$

we see that

$$\left(\sum_{i=1}^n \langle v, u_i \rangle\right)^2 \leq \sum_{1 \leq i,j \leq n} \langle u_i, u_j \rangle. \qquad (2)$$

Thus, for instance, if $\langle v, u_i \rangle \geq \varepsilon$ for at least $\varepsilon n$ values of $i=1,\dots,n$, then (after removing those indices $i$ for which $\langle v, u_i \rangle < \varepsilon$) $\sum_{i,j} \langle u_i, u_j \rangle$ must be at least $\varepsilon^4 n^2$, which implies that $\langle u_i, u_j \rangle \geq \varepsilon^4/2$ for at least $\varepsilon^4 n^2/2$ pairs $(i,j)$. Or as another example: if a random variable $X$ exhibits at least $1\%$ positive correlation with $n$ other random variables $Y_1,\dots,Y_n$, then if $n > 10{,}000$, at least two distinct $Y_i,Y_j$ must have positive correlation with each other (if no distinct pair were positively correlated, (2) would give $(n/100)^2 \leq n$, i.e. $n \leq 10{,}000$), although this argument does not tell you which pair $Y_i,Y_j$ are so correlated. Thus one can view this inequality as a sort of "pigeonhole principle" for correlation.
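Inequality (2) is easy to test numerically. The sketch below (pure Python; helper names are our own) checks (2) on random unit vectors, and also evaluates both sides for an orthonormal family $u_i = e_i$ with $v = n^{-1/2}(e_1+\dots+e_n)$. There every correlation $\langle v,u_i\rangle$ equals $n^{-1/2}$, no pair $u_i,u_j$ is positively correlated, and (2) holds with equality. Taking $n = 10{,}000$ in this construction gives correlations of exactly $1\%$ with no positively correlated pair, so the threshold $n > 10{,}000$ cannot be lowered, at least for unit vectors.

```python
import math
import random


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def random_unit_vector(dim, rng):
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(dot(x, x))
    return [a / norm for a in x]


def sides_of_2(v, us):
    """Return (LHS, RHS) of inequality (2) for a unit vector v and vectors us."""
    lhs = sum(dot(v, u) for u in us) ** 2
    rhs = sum(dot(ui, uj) for ui in us for uj in us)
    return lhs, rhs


# Random test: v and u_1, ..., u_20 uniformly random on the unit sphere in R^6.
rng = random.Random(2)
v = random_unit_vector(6, rng)
us = [random_unit_vector(6, rng) for _ in range(20)]
lhs, rhs = sides_of_2(v, us)
print(lhs <= rhs + 1e-9)

# Equality case: orthonormal u_i = e_i and v = (e_1 + ... + e_n) / sqrt(n).
n = 100
basis = [[1.0 if j == i else 0.0 for j in range(n)] for i in range(n)]
v_eq = [1.0 / math.sqrt(n)] * n
lhs_eq, rhs_eq = sides_of_2(v_eq, basis)
print(lhs_eq, rhs_eq)  # both equal n, up to rounding
```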


A similar argument (multiplying each $u_i$ by an appropriate sign $\varepsilon_i$) shows the related van der Corput inequality

$$\left(\sum_{i=1}^n |\langle v, u_i \rangle|\right)^2 \leq \sum_{1 \leq i,j \leq n} |\langle u_i, u_j \rangle|, \qquad (3)$$

and this inequality is also true for complex inner product spaces. (Also, the $u_i$ do not need to be unit vectors for this inequality to hold.)
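The sign (or, in the complex case, phase) trick behind (3) can be made concrete. The sketch below uses the Hermitian inner product $\langle x,y\rangle = \sum_k x_k \overline{y_k}$, a unit vector $v$ and deliberately non-unit vectors $u_i$ in ${\bf C}^5$ (all choices ours). Rotating each $u_i$ by the phase $c_i = \langle v,u_i\rangle/|\langle v,u_i\rangle|$ makes $\langle v, c_i u_i\rangle = |\langle v,u_i\rangle|$ real and non-negative, after which the Cauchy-Schwarz argument for (2) applies.

```python
import math
import random


def inner(x, y):
    """Hermitian inner product <x, y> = sum_k x_k * conj(y_k), linear in x."""
    return sum(a * b.conjugate() for a, b in zip(x, y))


def random_complex_vector(dim, rng, scale=1.0):
    return [scale * complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(dim)]


def sides_of_3(v, us):
    """Return (LHS, RHS) of inequality (3) for a unit vector v and arbitrary vectors us."""
    lhs = sum(abs(inner(v, u)) for u in us) ** 2
    rhs = sum(abs(inner(ui, uj)) for ui in us for uj in us)
    return lhs, rhs


rng = random.Random(3)
raw = random_complex_vector(5, rng)
norm = math.sqrt(inner(raw, raw).real)
v = [a / norm for a in raw]  # unit vector
us = [random_complex_vector(5, rng, scale=rng.random()) for _ in range(15)]  # not unit

lhs, rhs = sides_of_3(v, us)
print(lhs <= rhs + 1e-9)

# Phase trick: <v, c u> = conj(c) <v, u>, which equals |<v, u>| for this choice of c.
phases = [inner(v, u) / abs(inner(v, u)) for u in us]
rotated = [[c * a for a in u] for c, u in zip(phases, us)]
print(all(abs(inner(v, r) - abs(inner(v, u))) < 1e-9 for r, u in zip(rotated, us)))
```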

Geometrically, the picture is this: if $v$ positively correlates with all of the $u_1,\dots,u_n$, then the $u_1,\dots,u_n$ are all squashed into a somewhat narrow cone centred at $v$. The cone is still wide enough to allow a few pairs $u_i, u_j$ to be orthogonal (or even negatively correlated) with each other, but (when $n$ is large enough) it is not wide enough to allow all of the $u_i,u_j$ to be so widely separated. Remarkably, the bound here does not depend on the dimension of the ambient inner product space; while increasing the number of dimensions should in principle add more "room" to the cone, this effect is counteracted by the fact that in high dimensions, almost all pairs of vectors are close to orthogonal, and the exceptional pairs that are even weakly correlated to each other become exponentially rare. (See this previous blog post for some related discussion; in particular, Lemma 2 from that post is closely related to the van der Corput inequality presented here.)
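The high-dimensional phenomenon invoked here can be seen empirically. In the sketch below (our own illustration, with uniformly random unit vectors standing in for "typical" pairs), the average absolute correlation of a random pair shrinks roughly like $d^{-1/2}$ as the dimension $d$ grows, and the fraction of pairs with correlation at least $0.1$ drops sharply. The reference value $\sqrt{2/(\pi d)}$ printed alongside is a large-$d$ heuristic, not an exact formula.

```python
import math
import random


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def random_unit_vector(dim, rng):
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(dot(x, x))
    return [a / norm for a in x]


def correlation_stats(dim, pairs=300, threshold=0.1, seed=4):
    """Mean |<x, y>| and fraction of pairs with <x, y> >= threshold, over random unit pairs."""
    rng = random.Random(seed)
    corrs = [dot(random_unit_vector(dim, rng), random_unit_vector(dim, rng))
             for _ in range(pairs)]
    mean_abs = sum(abs(c) for c in corrs) / pairs
    frac_high = sum(c >= threshold for c in corrs) / pairs
    return mean_abs, frac_high


stats = {d: correlation_stats(d) for d in (3, 30, 300, 1000)}
for d, (mean_abs, frac_high) in stats.items():
    print(d, round(mean_abs, 4), round(math.sqrt(2 / (math.pi * d)), 4), frac_high)
```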


A particularly common special case of the van der Corput inequality arises when $v$ is a unit vector fixed by some unitary operator $T$, and the $u_i$ are shifts $u_i = T^i u$ of a single unit vector $u$. In this case, the inner products $\langle v, u_i \rangle$ are all equal, and we arrive at the useful van der Corput inequality

$$|\langle v, u \rangle|^2 \leq \frac{1}{n^2} \sum_{1 \leq i,j \leq n} |\langle T^i u, T^j u \rangle|. \qquad (4)$$

(In fact, one can even remove the absolute values from the right-hand side, by using (2) instead of (3).) Thus, to show that $v$ has negligible correlation with $u$, it suffices to show that the shifts of $u$ have negligible correlation with each other.
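A finite-dimensional sketch of (4), under assumptions of our own choosing: $T$ is the cyclic shift on ${\bf R}^{64}$ (an orthogonal, hence unitary, operator), $v$ is the normalised constant vector, which $T$ fixes, and $u$ is a random unit vector biased towards $v$ so that the left-hand side is not tiny.

```python
import math
import random


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def shift(x, k):
    """Cyclic shift T^k on R^N: (T^k x)_m = x_{(m + k) mod N}."""
    N = len(x)
    return [x[(m + k) % N] for m in range(N)]


def sides_of_4(v, u, n):
    """Return (LHS, RHS) of inequality (4) with T the cyclic shift."""
    shifts = [shift(u, i) for i in range(1, n + 1)]
    lhs = dot(v, u) ** 2
    rhs = sum(abs(dot(a, b)) for a in shifts for b in shifts) / n ** 2
    return lhs, rhs


N = 64
v = [1 / math.sqrt(N)] * N  # constant unit vector, fixed by the cyclic shift
rng = random.Random(5)
raw = [rng.gauss(0.0, 1.0) + 0.3 for _ in range(N)]  # +0.3 biases u towards v
norm = math.sqrt(dot(raw, raw))
u = [a / norm for a in raw]

# <v, T^i u> does not depend on i, because T fixes v and preserves inner products.
print(max(abs(dot(v, shift(u, i)) - dot(v, u)) for i in range(N)))
for n in (1, 4, 16, 64):
    lhs, rhs = sides_of_4(v, u, n)
    print(n, round(lhs, 4), round(rhs, 4), lhs <= rhs + 1e-12)
```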

Here is a basic application of the van der Corput inequality:

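Proposition 2 (Weyl equidistribution estimate) Let $P: {\bf Z} \rightarrow {\bf R}/{\bf Z}$ be a polynomial with at least one non-constant coefficient irrational. Then one has

$$\lim_{N \rightarrow \infty} \frac{1}{N} \sum_{n=1}^N e(P(n)) = 0,$$

where $e(x) := e^{2\pi i x}$.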
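Proposition 2 can be illustrated numerically (though of course not proved this way). The sketch below computes normalised Weyl sums for $P(n) = \sqrt{2} n^2$, which satisfies the hypothesis, and for $P(n) = n^2/3$, which does not; the choice of polynomials and of $N$ is ours. For $N$ divisible by $3$ the second average equals $\frac{1}{3} + \frac{2}{3} e(1/3) = i/\sqrt{3}$ exactly (only the residues $n^2 \equiv 0, 1 \pmod 3$ occur), so it stays near $1/\sqrt{3}$ in magnitude, while the first is much smaller.

```python
import cmath
import math


def e(x):
    """e(x) = exp(2 pi i x); x is reduced mod 1 first to keep the phase accurate."""
    return cmath.exp(2j * math.pi * (x % 1.0))


def weyl_average(P, N):
    """The normalised Weyl sum (1/N) * sum_{n=1}^{N} e(P(n))."""
    return sum(e(P(n)) for n in range(1, N + 1)) / N


def P_irrational(n):
    # Example polynomial with irrational top coefficient: sqrt(2) n^2.
    return math.sqrt(2) * n * n


def P_rational(n):
    # Example with all coefficients rational (n^2 / 3), so Proposition 2 does not apply.
    return n * n / 3


for N in (999, 9_999, 99_999):
    print(N, abs(weyl_average(P_irrational, N)), abs(weyl_average(P_rational, N)))
```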

Note that this assertion implies the more general assertion

$$\lim_{N \rightarrow \infty} \frac{1}{N} \sum_{n=1}^N e(kP(n)) = 0$$

for any non-zero integer $k$ (simply by replacing $P$ by $kP$), which by the Weyl equidistribution criterion is equivalent to the sequence $P(1), P(2), \dots$ being asymptotically equidistributed in ${\bf R}/{\bf Z}$.
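Equidistribution itself can be eyeballed by binning. In this sketch the example polynomial $P(n) = \sqrt{2} n^2 + n/2$ and the choice of $10$ bins are our own; each bin should receive roughly $10\%$ of the first $N$ values of $P(n) \bmod 1$.

```python
import math


def bin_fractions(P, N, bins=10):
    """Fraction of P(1), ..., P(N) (taken mod 1) in each of `bins` equal subintervals of [0, 1)."""
    counts = [0] * bins
    for n in range(1, N + 1):
        # The extra "% bins" guards against a value that rounds to exactly 1.0.
        counts[int((P(n) % 1.0) * bins) % bins] += 1
    return [c / N for c in counts]


def P(n):
    # Hypothetical example polynomial with irrational leading coefficient sqrt(2).
    return math.sqrt(2) * n * n + 0.5 * n


fractions = bin_fractions(P, 100_000)
print([round(f, 3) for f in fractions])      # each entry should be close to 0.1
print(max(abs(f - 0.1) for f in fractions))  # largest deviation from uniform
```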

Proof: We induct on the degree $d$ of the polynomial $P$, which must be at least one. If $d$ is equal to one, the claim is easily established from the geometric series formula, so suppose that $d > 1$ and that the claim has already been proven for all degrees less than $d$. If the top coefficient $a_d$ of $P(n) = a_d n^d + \dots + a_0$ is rational, say $a_d = \frac{b}{q}$, then by partitioning the natural numbers into residue classes modulo $q$, we see that the claim follows from the induction hypothesis (on each class $n = qm + r$ the term $a_d n^d$ is constant modulo $1$, so $P(qm+r)$ agrees modulo $1$ with a polynomial in $m$ of degree less than $d$ that still has an irrational non-constant coefficient); so we may assume that the top coefficient $a_d$ is irrational.
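The key arithmetic fact behind the residue-class step is that, when $a_d = b/q$ is rational, $a_d n^d \bmod 1$ depends only on $n \bmod q$, since $(qm+r)^d - r^d$ is divisible by $q$. A short exact check with Python's `fractions.Fraction` (the function names are ours):

```python
from fractions import Fraction


def frac_part(x):
    """Exact fractional part of a Fraction, in [0, 1)."""
    return x % 1


def top_term_constant_on_classes(b, q, d, m_range=50):
    """Check that (b/q) * n**d mod 1 depends only on n mod q, for n = q*m + r.

    Since (q*m + r)**d - r**d is divisible by q, the quantity
    (b/q) * ((q*m + r)**d - r**d) is an integer.
    """
    a = Fraction(b, q)
    for r in range(q):
        base = frac_part(a * r ** d)
        for m in range(m_range):
            if frac_part(a * (q * m + r) ** d) != base:
                return False
    return True


print(top_term_constant_on_classes(3, 7, 3))
print(top_term_constant_on_classes(5, 12, 4))
```

Exact rational arithmetic is used here so that the equality test is not affected by floating-point rounding.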

In order to use the van der Corput inequality as stated above (i.e. in the formalism of inner product spaces) we will need a non-principal ultrafilter $p$ (see e.g. this previous blog post for basic theory of ultrafilters); we leave it as an exercise to the reader to figure out how to present the argument below without the use of ultrafilters (or similar devices, such as Banach limits). The ultrafilter $p$ defines an inner product $\langle \cdot, \cdot \rangle_p$ on bounded complex sequences $z = (z_1,z_2,z_3,\dots)$ by setting

$$\langle z, w \rangle_p := \lim_{N \rightarrow p} \frac{1}{N} \sum_{n=1}^N z_n \overline{w_n}.$$

Strictly speaking, this inner product is only positive semi-definite rather than positive definite, but one can quotient out by the null vectors to obtain a positive-definite inner product. To establish the claim, it will suffice to show that

$$\langle 1, e(P) \rangle_p = 0$$

for every non-principal ultrafilter $p$.
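Ultrafilter limits cannot be computed, but the finite averages they are built from can. The sketch below uses a hypothetical helper `avg_inner(z, w, N)` $= \frac{1}{N}\sum_{n\le N} z_n\overline{w_n}$ as a finite-$N$ stand-in for $\langle z,w\rangle_p$ (an assumption for illustration only: the actual inner product takes the limit along $p$). It shows why quotienting by null vectors is needed: a sequence with only finitely many non-zero terms has self-inner product tending to $0$, although it is not the zero sequence.

```python
def avg_inner(z, w, N):
    """Finite-N average (1/N) sum_{n=1}^{N} z_n conj(w_n); sequences are functions of n >= 1."""
    return sum(z(n) * w(n).conjugate() for n in range(1, N + 1)) / N


def spike(n):
    """The sequence (1, 0, 0, ...): not the zero sequence, but a null vector in the limit."""
    return 1.0 if n == 1 else 0.0


def one(n):
    """The constant sequence 1, which has norm 1 for every N."""
    return 1.0


for N in (10, 1_000, 100_000):
    print(N, avg_inner(spike, spike, N), avg_inner(one, one, N))
```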

Note that the space of bounded sequences (modulo null vectors) admits a shift $T$, defined by

$$T (z_1,z_2,\dots) := (z_2,z_3,\dots).$$
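With finite averages $\frac{1}{N}\sum_{n\le N} z_n\overline{w_n}$ used as a stand-in (an illustrative assumption, not the ultrafilter inner product itself), the shift fails to preserve inner products only by a boundary term: $\frac{1}{N}\sum_{n=1}^N z_{n+1}\overline{w_{n+1}} - \frac{1}{N}\sum_{n=1}^N z_n \overline{w_n} = \frac{1}{N}(z_{N+1}\overline{w_{N+1}} - z_1\overline{w_1})$, which is $O(1/N)$ for bounded sequences. This is one way to see why $T$ preserves the inner product in the limit. The sequences `z`, `w` below are hypothetical examples.

```python
import cmath
import math


def avg_inner(z, w, N):
    """Finite-N average (1/N) sum_{n=1}^{N} z_n conj(w_n); sequences are functions of n >= 1."""
    return sum(z(n) * w(n).conjugate() for n in range(1, N + 1)) / N


def T(z):
    """The shift (z_1, z_2, ...) -> (z_2, z_3, ...)."""
    return lambda n: z(n + 1)


def z(n):
    # Hypothetical bounded sequence e(sqrt(2) n^2).
    return cmath.exp(2j * math.pi * math.sqrt(2) * n * n)


def w(n):
    # Hypothetical bounded sequence e(sqrt(3) n).
    return cmath.exp(2j * math.pi * math.sqrt(3) * n)


def one(n):
    return 1.0


N = 1_000
gap = avg_inner(T(z), T(w), N) - avg_inner(z, w, N)
# Telescoping: both averages share the terms n = 2..N, leaving one boundary term at each end.
boundary = (z(N + 1) * w(N + 1).conjugate() - z(1) * w(1).conjugate()) / N
print(abs(gap), abs(gap - boundary))
print(T(one)(7) == one(7))  # the constant sequence 1 is fixed by T
```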

This shift becomes unitary once we quotient out by null vectors, and the constant sequence $1$ is clearly a unit vector that is invariant with respect to the shift. So by the van der Corput inequality, we have

$$|\langle 1, e(P) \rangle_p|^2 \leq \frac{1}{H^2} \sum_{1 \leq h,h' \leq H} |\langle T^h e(P), T^{h'} e(P) \rangle_p|$$

for any $H \geq 1$. But we may rewrite $\langle T^h e(P), T^{h'} e(P) \rangle_p = \langle 1, e(T^{h'}P - T^h P) \rangle_p$, where $T^h P(n) := P(n+h)$. Then observe that if $h \neq h'$, $T^{h'} P - T^h P$ is a polynomial of degree $d-1$ whose $n^{d-1}$ coefficient $d(h'-h)a_d$ is irrational, so by the induction hypothesis we have $\langle T^h e(P), T^{h'} e(P) \rangle_p = 0$ for $h \neq h'$. For $h=h'$ we of course have $\langle T^h e(P), T^{h'} e(P) \rangle_p = 1$, and so

$$|\langle 1, e(P) \rangle_p|^2 \leq \frac{1}{H}$$

for any $H \geq 1$. Letting $H \rightarrow \infty$, we obtain the claim. $\Box$
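The two algebraic facts used in this step, that $T^{h'}P - T^hP$ has degree $d-1$ with leading coefficient $d(h'-h)a_d$, and that $\langle T^h e(P), T^{h'} e(P)\rangle = \langle 1, e(T^{h'}P - T^hP)\rangle$, can be checked in a sketch. Polynomials are stored as coefficient lists (`coeffs[k]` multiplies $n^k$), the example polynomials are hypothetical, and the inner product is replaced by the finite average $\frac1N\sum_{n\le N} z_n\overline{w_n}$, for which the second identity holds exactly, term by term.

```python
import cmath
import math


def shift_poly(coeffs, h):
    """Coefficients of n -> P(n + h), where coeffs[k] is the coefficient of n**k in P."""
    out = [0] * len(coeffs)
    for k, a in enumerate(coeffs):
        # Binomial theorem: a (n + h)^k = sum_{j <= k} a C(k, j) h^(k - j) n^j.
        for j in range(k + 1):
            out[j] += a * math.comb(k, j) * h ** (k - j)
    return out


def difference(coeffs, h, h_prime):
    """Coefficients of T^{h'} P - T^h P, i.e. of n -> P(n + h') - P(n + h)."""
    return [b - a for a, b in zip(shift_poly(coeffs, h), shift_poly(coeffs, h_prime))]


def evaluate(coeffs, n):
    return sum(a * n ** k for k, a in enumerate(coeffs))


def e(x):
    return cmath.exp(2j * math.pi * (x % 1.0))


def avg_inner(z, w, N):
    """Finite-N stand-in for <z, w>_p: (1/N) sum_{n=1}^{N} z_n conj(w_n)."""
    return sum(z(n) * w(n).conjugate() for n in range(1, N + 1)) / N


# Degree drop: for P(n) = n^3 (d = 3, a_d = 1), h = 0, h' = 2, the difference is
# 6 n^2 + 12 n + 8, with n^{d-1} coefficient d (h' - h) a_d = 6.
cubic = [0, 0, 0, 1]
print(difference(cubic, 0, 2))

# Rewriting step at finite N, for the hypothetical P(n) = sqrt(2) n^2 + 1/10.
P = [0.1, 0.0, math.sqrt(2)]
h, h_prime, N = 1, 3, 2_000
lhs = avg_inner(lambda n: e(evaluate(P, n + h)), lambda n: e(evaluate(P, n + h_prime)), N)
D = difference(P, h, h_prime)  # coefficients of T^{h'}P - T^h P
rhs = avg_inner(lambda n: 1.0, lambda n: e(evaluate(D, n)), N)
print(abs(lhs - rhs))
```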
