Nassim Nicholas Taleb looks at the risks threatening humanity

July 25th, 2017

Summary: How to deal with risks dominates our headlines, usually driven by single-interest groups that see only their favorite threat. Statistician Nassim Nicholas Taleb’s latest work offers a way to identify the most serious threats facing us, and determine how much we should spend to fight each of them. It has received much attention. Is it useful? Part one of two.

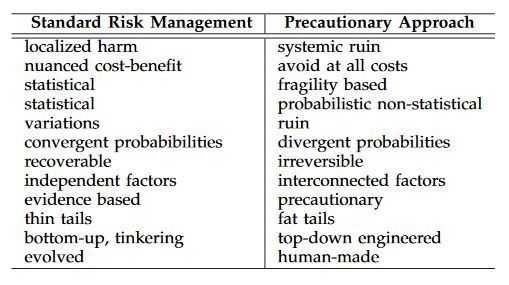

A series of papers by Nassim Nicholas Taleb et al. made a large contribution to our understanding of risk by placing the Precautionary Principle (PP) within the statistical and probabilistic structure of "ruin" problems. The main paper is "The Precautionary Principle (with Application to the Genetic Modification of Organisms)", well worth reading for anyone interested in GMOs or risk analysis. I will not attempt to summarize it. I will point out one aspect relevant to many of the key challenges of our time: how should policy-makers allocate funds to prevent or mitigate shockwave threats (potentially disastrous but of low or uncertain probability)?

Excerpt: What is a "ruin" scenario, and how should we respond to it?

"We believe that the PP should be evoked only in extreme situations: when the potential harm is systemic (rather than localized) and the consequences can involve total irreversible ruin, such as the extinction of human beings or all life on the planet.

"A ruin problem is one where outcomes of risks have a non-zero probability of resulting in unrecoverable losses. …In biology, an example would be a species that has gone extinct. For nature, ‘ruin’ is ecocide: an irreversible termination of life at some scale, which could be planetwide.

"…Our concern is with public policy. …Policy makers have a responsibility to avoid catastrophic harm for society as a whole; the focus is on the aggregate, not at the level of single individuals, and on global-systemic, not idiosyncratic, harm. This is the domain of collective ‘ruin’ problems.

"…For example, for humanity global devastation cannot be measured on a scale in which harm is proportional to level of devastation. The harm due to complete destruction is not the same as 10 times the destruction of 1/10 of the system. As the percentage of destruction approaches 100%, the assessment of harm diverges to infinity (instead of converging to a particular number) due to the value placed on a future that ceases to exist.

"Because the ‘cost’ of ruin is effectively infinite, cost-benefit analysis (in which the potential harm and potential gain are multiplied by their probabilities and weighed against each other) is no longer a useful paradigm. Even if probabilities are expected to be zero but have a non-zero uncertainty, then a sensitivity analysis that considers the impact of that uncertainty results in infinities as well. The potential harm is so substantial that everything else in the equation ceases to matter. In this case, we must do everything we can to avoid the catastrophe.

"…If the consequences are systemic, the associated uncertainty of risks must be treated differently than if it is not. In such cases, precautionary action is not based on direct empirical evidence but on analytical approaches based upon the theoretical understanding of the nature of harm. It relies on probability theory without computing probabilities. The essential question is whether or not global harm is possible or not."

———————— End Excerpt. ————————

Public policy implications of "ruin" scenarios

Taleb explains that "ruin" events must be defended against "at all costs …Because the ‘cost’ of ruin is effectively infinite …we must do everything we can to avoid the catastrophe." This is operationally useless since there are many shockwave scenarios with ruinous outcomes.

By his theory, we must spend whatever it takes to "defend against" all of them. I will mention just two threats to illustrate this. First, the oceans are dying, with potentially ruinous consequences for humanity. See the Ocean Health Index; see the jellyfish warnings; see some of the many warnings about this problem.

Second, the Earth has been hit by asteroids and comets in the past, with ruinous consequences — sometimes exterminating the world’s major lifeforms. It will happen again. For details see these posts. Oddly, Taleb mentions the history of extinction-level events from asteroid and comet impacts, but does not mention why this kind of ruin event should not become a major public policy concern. It exactly meets his formal definition. (Similarly he writes about the odds of a Third World War, but does not discuss the "ruin" scenario of global nuclear war.)
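A little arithmetic shows why an infinite cost gives no guidance. The sketch below is only an illustration: it assumes "effectively infinite" can be modeled as floating-point infinity, and the probabilities are invented placeholders, not estimates of any real risk. The function name `expected_loss` is my own.

```python
import math

# Placeholder annual probabilities: invented for illustration, not estimates.
ruin_threats = {
    "ocean collapse": 1e-3,
    "asteroid or comet impact": 1e-8,
    "global nuclear war": 1e-4,
}

RUIN_COST = math.inf  # models "the cost of ruin is effectively infinite"


def expected_loss(probability: float, cost: float) -> float:
    """Standard cost-benefit term: probability times cost."""
    if probability == 0:
        return 0.0  # avoid 0 * inf, which is NaN in floating point
    return probability * cost


losses = {name: expected_loss(p, RUIN_COST) for name, p in ruin_threats.items()}

# Every threat with a non-zero probability gets the same infinite score,
# so sorting by expected loss cannot say which one to fund first.
all_tied = len(set(losses.values())) == 1
print(losses)
print("All threats tied:", all_tied)
```

However far apart the probabilities are, the scores are identical, so the rule gives a budget-maker nothing to rank by.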

After we’ve funded every ruin scenario "at all costs", we have to spend more to prepare for the merely awful scenarios, such as earthquakes, tsunamis, pandemics (like the flu), and famines. Then there are more exotic threats, such as a reversal of Earth’s magnetic field, eruption of the Yellowstone supervolcano, continental destruction from other volcanoes, peak fresh water, and other shockwave events. We cannot spend vast sums on all of them.

How can policy-makers allocate resources across such a broad spectrum of threats? The recommendations of Taleb’s methodology provide less help than the usual comparisons of probability, cost, and risk, which are simple and objective, albeit imperfect.
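For contrast, here is a minimal sketch of what a conventional comparison looks like once costs are treated as finite. Everything in it is an assumption made for illustration: the `Threat` fields, the "benefit per unit spent" measure, and above all the numbers, which are invented placeholders in arbitrary units and not estimates of anything.

```python
from dataclasses import dataclass


@dataclass
class Threat:
    name: str
    annual_probability: float  # chance of the event in a given year
    loss: float                # damage if it happens, arbitrary units
    mitigation_cost: float     # annual spending, same units
    risk_reduction: float      # fraction of expected loss the spending removes

    @property
    def expected_loss(self) -> float:
        return self.annual_probability * self.loss

    @property
    def benefit_per_unit_spent(self) -> float:
        return self.expected_loss * self.risk_reduction / self.mitigation_cost


# All numbers are invented placeholders, chosen only to show the mechanics.
threats = [
    Threat("pandemic", 0.02, 5_000.0, 10.0, 0.5),
    Threat("major earthquake", 0.05, 500.0, 5.0, 0.4),
    Threat("asteroid impact", 0.000001, 10_000_000.0, 2.0, 0.8),
]

# Rank by how much expected loss each unit of spending removes.
ranked = sorted(threats, key=lambda t: t.benefit_per_unit_spent, reverse=True)
for t in ranked:
    print(f"{t.name}: expected loss {t.expected_loss:.1f}, "
          f"benefit per unit spent {t.benefit_per_unit_spent:.2f}")
```

The ranking is only as good as the inputs, which is the "imperfect" part. But it does produce an ordering that can be argued over and revised.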

Tomorrow: Taleb warns us about climate change.

A super-volcano will erupt again, eventually.

Another perspective on risk

"Therefore I tell you, do not be anxious about your life …which of you by being anxious can add a single hour to his span of life? …seek first the kingdom of God and his righteousness …Therefore do not be anxious about tomorrow, for tomorrow will be anxious for itself. Sufficient for the day is its own trouble."— Matthew 6:25-34.
